As we approach the third anniversary of the Panama Papers, the gigantic financial leak that brought down two governments and drilled the biggest hole yet in tax haven secrecy, I often wonder what stories we missed.

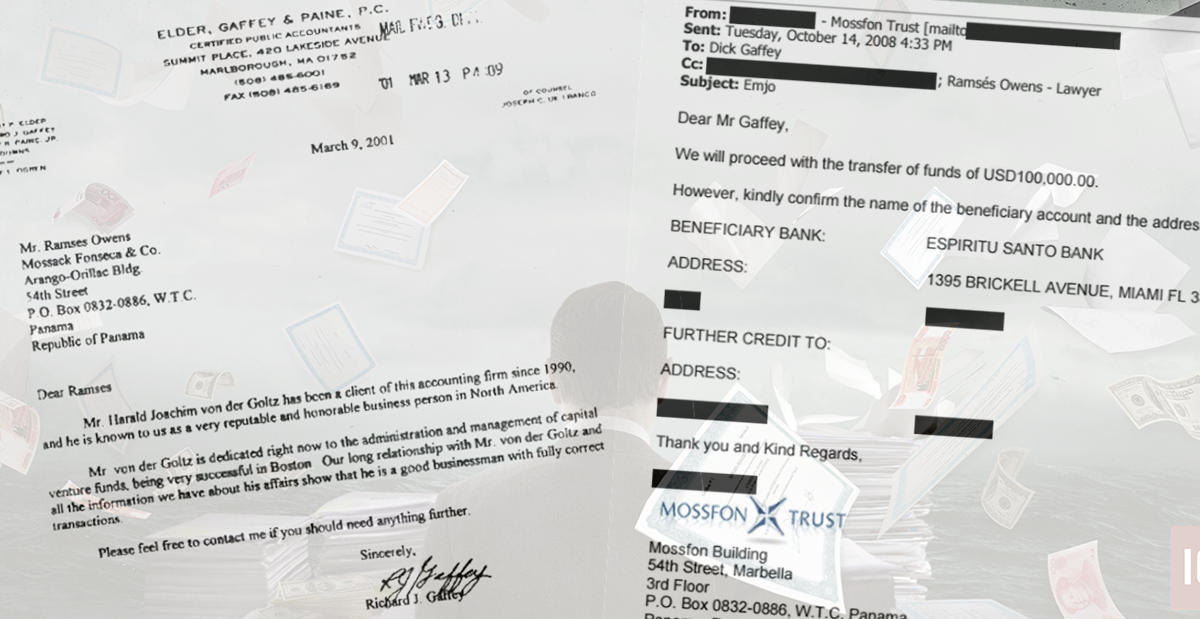

The Panama Papers provided an inspiring example of media collaboration across borders and of open-source technology in the service of reporting. As one of my colleagues put it: “You basically had a gargantuan and messy amount of data in your hands and you used technology to distribute your problem, to make it everybody’s problem.” He was referring to the 400 journalists, including himself, who for more than a year worked together in a virtual newsroom to unravel the mysteries hidden in the trove of documents from the Panamanian law firm Mossack Fonseca.

Those reporters used open-source data mining technology and graph databases to wrestle 11.5 million documents in dozens of different formats to the ground. Still, the ones doing the great majority of the thinking in that equation were the journalists. Technology helped us organize, index, filter and make the data searchable. Everything else came down to what those 400 brains collectively knew and understood about the characters and the schemes, the straw men, the front companies and the banks that were involved in the secret offshore world.

If you think about it, it was still a highly manual and time-consuming process. Reporters had to type their searches one by one in a Google-like platform based on what they knew.

What about what they didn’t know?

Fast-forward three years to the booming world of machine learning algorithms that are changing the way humans work, from farming to medicine to the business of war. Computers learn what we know and then help us find unforeseen patterns and anticipate events in ways that would be impossible for us to do on our own.

What would our research look like if we were to deploy machine learning algorithms on the Panama Papers? Can we teach computers to recognize money laundering? Can an algorithm differentiate a legitimate loan from a fake one designed to shuffle money among entities? Could we use facial recognition to more easily pinpoint which of the thousands of passport copies in the trove belong to elected politicians or known criminals?

The answer to all of that is yes. The bigger question is how might we democratize those AI technologies, today largely controlled by Google, Facebook, IBM and a handful of other large companies and governments, and fully integrate them into the investigative reporting process in newsrooms of all sizes?

One way is through partnerships with universities. I came to Stanford last fall on a John S. Knight Journalism Fellowship to study how artificial intelligence can enhance investigative reporting so we can uncover wrongdoing and corruption more efficiently.

Democratizing Artificial Intelligence

My research led me to Stanford’s Artificial Intelligence Laboratory and more specifically to the lab of Prof. Chris Ré, a MacArthur genius grant recipient whose team has been producing cutting-edge research on a subset of machine learning techniques called “weak supervision.” The lab’s goal is to “make it faster and easier to inject what a human knows about the world into a machine learning model,” explains Alex Ratner, a Ph.D. student who leads the lab’s open source weak supervision project, called Snorkel.

The predominant machine learning approach today is supervised learning, in which humans spend months or years hand-labeling millions of data points individually so computers can learn to predict events. For example, to train a machine learning model to predict whether a chest X-ray is abnormal or not, a radiologist might hand-label tens of thousands of radiographs as “normal” or “abnormal.”

The goal of Snorkel, and weak supervision techniques more broadly, is to let ‘domain experts’ (in our case, journalists) train machine learning models using functions or rules that automatically label data instead of the tedious and costly process of labeling by hand. Something along the lines of: “If you encounter problem x, tackle it this way.” (Here’s a technical description of Snorkel).
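To make the idea concrete, here is a minimal sketch in plain Python of what "rules that automatically label data" can look like. It does not use Snorkel's actual API. The function names, the keywords and the sample report texts are all hypothetical, and the simple majority vote stands in for the statistical model a tool like Snorkel would use to weigh rules against one another.

```python
from collections import Counter
from typing import Callable, List

# Hypothetical label values for an incident-report classifier.
DEATH, NOT_DEATH, ABSTAIN = 1, 0, -1


def lf_mentions_death(text: str) -> int:
    """Rule from a reporter: these words usually signal a death."""
    lowered = text.lower()
    keywords = ("died", "death", "expired", "deceased")
    return DEATH if any(k in lowered for k in keywords) else ABSTAIN


def lf_patient_recovered(text: str) -> int:
    """Rule from a reporter: a recovered or discharged patient is not a death."""
    lowered = text.lower()
    return NOT_DEATH if "recovered" in lowered or "discharged" in lowered else ABSTAIN


def lf_no_patient_involved(text: str) -> int:
    """Rule from a reporter: reports with no patient cannot be deaths."""
    return NOT_DEATH if "no patient involvement" in text.lower() else ABSTAIN


LABELING_FUNCTIONS: List[Callable[[str], int]] = [
    lf_mentions_death,
    lf_patient_recovered,
    lf_no_patient_involved,
]


def weak_label(text: str, lfs: List[Callable[[str], int]] = LABELING_FUNCTIONS) -> int:
    """Combine the rules' votes by simple majority (an assumption of this sketch).

    Returns ABSTAIN when no rule fires or when the top votes are tied.
    """
    votes = [v for v in (lf(text) for lf in lfs) if v != ABSTAIN]
    if not votes:
        return ABSTAIN
    ranked = Counter(votes).most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return ABSTAIN  # rules disagree evenly; leave it for a human
    return ranked[0][0]


if __name__ == "__main__":
    samples = [
        "Patient died two days after the implant procedure.",
        "Device malfunctioned; patient recovered and was discharged.",
        "Packaging was damaged on arrival.",
    ]
    for report in samples:
        print(weak_label(report), report)
```

The labels produced this way are noisy, which is the trade-off: a few rules written in an afternoon can label far more documents than a person could by hand, and the ones the rules cannot settle are left for a reporter to review.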

“We aim to democratize and speed up machine learning,” Ratner said when we first met last fall, which immediately got me thinking about the possible applications to investigative reporting. If Snorkel can help doctors quickly extract knowledge from troves of X-rays and CT scans to triage patients in a way that makes sense, rather than leaving patients languishing in a queue, it can likely also help journalists find leads and prioritize stories in Panama Papers-like situations.

Ratner also told me that he wasn’t interested in “needlessly fancy” solutions. He aims for the fastest and simplest way to solve each problem.

In early January, my newsroom, the International Consortium of Investigative Journalists, and Ré’s Stanford lab launched a collaboration that seeks to enhance the investigative reporting process. To honor the “nothing needlessly fancy” principle, we call it Machine Learning for Investigations.

For journalists, the appeal of collaborating with academics is twofold: access to tools and techniques that can aid our reporting, and the absence of commercial purpose in the university setting. For academics, the appeal is the “real world” problems and datasets journalists bring to the table and, potentially, new technical challenges.

Here are the lessons we have learned so far in our partnership:

Pick an AI lab with a track record of “real world” applications.

Chris Ré’s lab, for example, is part of a consortium of government and private sector organizations that developed a set of tools designed to “light up” the Dark Web. Using machine learning, law enforcement agencies were able to extract and visualize information, sometimes hidden inside images, that helped them go after human trafficking networks that thrive on the internet. Searching the Panama Papers is not that different from searching the depths of the Dark Web. We have a lot to learn from the lab’s previous work.

Make sure there are incentives on both sides.

There are many civic-minded AI scientists concerned about the state of democracy who would like to help journalists do world-changing reporting. But for a partnership to last and be productive, it helps if there is a technical challenge academics can tackle, and if the data can be reproduced and published in an academic setting. Sort out early in the relationship whether the goals align and what the trade-offs are. For us, it meant focusing first on a medical investigation based on public data because it fit well with research Ré’s lab was already doing to help doctors anticipate when a medical device might fail. The partnership is helping us build on the machine learning work the ICIJ team did last year for the award-winning Implant Files investigation, which exposed a gross lack of regulation of medical devices worldwide.

Choose useful, not fancy.

There are problems for which we don’t need machine learning at all. So how do we know when AI is the right choice? John Keefe, who leads Quartz AI Studio, says machine learning can help journalists in situations where they know what information they are looking for in large amounts of documents but finding it would take too long or would be too hard. Take BuzzFeed News’ 2017 spy planes investigation, in which a machine learning algorithm was deployed on flight-tracking data to identify surveillance aircraft (here the computer had been taught the turning rates, speed and altitude patterns of spy planes). Or take the Atlanta Journal-Constitution probe into sexual abuse by doctors, in which a computer algorithm helped identify cases of sexual abuse in more than 100,000 disciplinary documents. I am also fascinated by the work of Ukrainian data journalism agency Texty, which used machine learning to uncover illegal amber mining sites through the analysis of 450,000 satellite images.

‘Reporter in the loop’ all the way through.

If you are using machine learning in your investigation, make sure to get buy-in from the reporters and editors involved in the project. You may meet resistance because newsroom AI literacy is still quite low. At ICIJ, research editor Emilia Diaz-Struck has been the “AI translator” for our newsroom, helping journalists understand why and when we may choose to use machine learning. “The bottom line is that we use it to solve journalistic problems that otherwise wouldn’t get solved,” she says. Reporters play a big role in the AI process because they are the ‘domain experts’ that the computer needs to learn from, the equivalent of the radiologist who trains a model to recognize different levels of malignancy in a tumor. In the Implant Files investigation, reporters helped train a machine learning algorithm to systematically identify death reports that were misclassified as injuries and malfunctions, a trend first spotted by a source who tipped off the journalists.

It’s not magic!

The computer is augmenting the work of a journalist, not replacing it. The AJC team read all the documents associated with the more than 6,000 doctor sex abuse cases it found using machine learning. ICIJ fact-checkers manually reviewed each of the 2,100 deaths the algorithm uncovered. “The journalism doesn’t stop, it just gets a hop,” says Keefe. His team at Quartz recently received a grant from the Knight Foundation to partner with newsrooms on machine learning investigations.

Share the experience so others can learn.

In this area, journalists have much to learn from the academic tradition of building on one another’s knowledge and openly sharing results, both good and bad. “Failure is an important signal for researchers,” says Ratner. “When we work on a project that fails, as embarrassing as it is, that’s often what kicks off multiyear research projects. In these collaborations, failure is something that should be tracked and measured and reported.”

So yes, you will be hearing from us either way!

There’s a ton of serendipity that can happen when two different worlds come together to tackle a problem. ICIJ’s data team has now started to collaborate with another part of Ré’s lab that specializes in extracting meaning and relationships from text that is “trapped” in tables and other strange formats (think SEC documents or head-spinning charts from ICIJ’s Luxembourg Leaks project).

The lab is also working on other, more futuristic applications, such as capturing natural language explanations from domain experts that can be used to train AI models (it’s appropriately called Babble Labble) or tracing radiologists’ eyes when they read a study to see if those signals can also help train algorithms.

Perhaps one day, not too far in the future, my ICIJ colleague Will Fitzgibbon will use Babble Labble to talk the computer’s ear off about his knowledge of money laundering. And we will trace my colleague Simon Bowers’ eyes when he interprets those impossible, multi-step charts that, when unlocked, reveal the schemes multinational companies use to avoid paying taxes.

In the meantime, we stay real. Nothing needlessly fancy.

Are you a journalist with a story idea or data that would benefit from machine learning? Are you a machine learning expert interested in collaborating with journalists? Reach out to me and let’s chat about ways to collaborate: marinawg@stanford.edu @MarinaWalkerG

The following people are part of the Machine Learning for Investigations partnership between ICIJ and Stanford: Alex Ratner, Jason Fries, Jared Dunnmon, Mandy Lu, Alison Callahan, Emilia Diaz-Struck, Rigoberto Carvajal, Sen Wu.